The Beginner’s Handbook to Robot txt

The robots.txt is the only file where its size doesn’t matter! It may be tiny, but it has big implications for your website and can impact your ranking considerably. Understanding what this file stands for and why you need to update it properly is a crucial aspect of technical SEO, so don’t miss out on this one! In this blog, you will learn all there is to know about this amazing file that lives (rent-free) on your site.

Key Takeaways

- txt files live at the root of your domain name.

- txt files allow you to restrict search engine crawlers’ reach on your website.

- This file can be used per the requirement to give directives of partial or full access.

- txt file can assist in managing your ‘crawl budget’.

What Exactly is a Robots.txt file?

Robots.txt file actually lives on your website, you can easily find it at: ‘example.com/robot.txt’.

So, if you’re curious about whether or not you have a robots.txt file, just go over to your site, add /robots.txt to your domain name and voila! There it is.

What exactly does the robot txt file do? Well, it tells Googlebot robots whether or not to crawl your website. It makes recommendations to the search bots who are crawling your webpage. This file tells the search engine Googlebot robots where they can crawl and where they can’t. It allows you to block all portions of your website or index the website. With this file, you can even block certain pages from being crawled.

However, a robots.txt file cannot absolutely guarantee that Googlebot robots won’t crawl an excluded page because it is a voluntary system. Though it is rare for major search engine bots to disobey directives, bad crawl robots, such as spambots, malware etc., are not exactly famous for being obedient. These bots can still ignore the directives and crawl the restricted page.

Another interesting thing about a robots.txt file is that it is publicly available, meaning anyone can access it. We already specified its exact location, but to refresh your memory: Adding a /robots.txt can lead you right to it. Since it is publicly accessible, we recommend not including any files or folders which contain business-critical information.

When Googlebot robots txt interpret directions in the robots.txt file, they receive one of three instructions:

- Partial access: Individual elements of the site can be crawled for partial access.

- Full access: Crawling everything is possible.

- Full denial: Robots are not allowed to crawl anything.

But, is Robots.txt Required?

Now that we are familiar with the concept of a robot txt file let us tell you the importance of having one. It is recommended by Google to ensure that your website has a robots.txt file. Therefore, if Google and other crawlers can’t find it, there is a chance that they might not crawl your website at all.

They are important because they can help manage crawler activities on your website. This is done so that they don’t overwork your index pages or websites, which are restricted for public viewing. For your better understanding, we have made a list of the reasons why you need to have a robots.txt file.

1) Hide Duplicate and Non-Public Pages: One of the primary benefits of a robots.txt file is that it helps in blocking certain pages or files from being crawled. You won’t need every page on your website to rank; this is where a robots txt file can help you block them from Googlebot robots crawler. Some examples of these pages are login pages, duplicate pages, internal search results pages etc.

2) Hide Miscellaneous Resources: In some cases, website owners might want to hide certain resources, like PDFs, videos etc., from search results. In such a case, using a robots.txt file is one of the best to prevent them from being indexed.

3) Utilize Crawl Budget: Crawl budget can be described as the number of pages a search engine will crawl at any given time. A crawl budget is important because it helps you ensure that your number of index pages does not exceed your crawl budget. A robot txt file can help you optimize your crawl budget by blocking unwanted pages from being indexed.

Finding the Robot.txt File

As we said before, the Robot txt file lives on your website itself. Its size has been specified at 500 KB by Google. Hence it does not take up a lot of space. You can check out this file for any website by adding: ‘xyz.com/robots.txt ‘.

One important thing to know about this file is that it should always live at the root of your domain. If, in case, it lives anywhere else, then the search engine crawlers will not find it and automatically assume you don’t have one.

What Does a Robot.txt File Look Like?

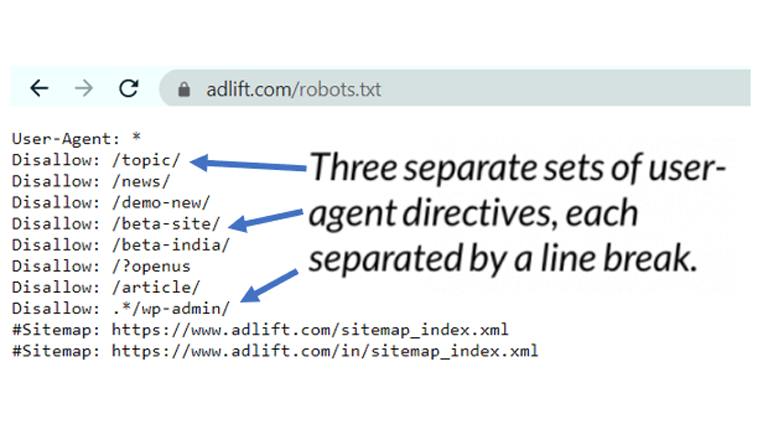

A robot txt file consists of one or multiple blocks of directives, where each one is specified as a ‘user-agent’. It also consists of a simple allow or disallow button. This is what it looks like:

There are two common directives in the robots.txt file. They are user agents and disallowed.

-

- User-agent: Every block of directive starts with a user agent which directs the crawler being addressed. For instance, if you want to tell a Googlebot not to crawl a certain page, then your directive will begin like this:

User-agent:*

Disallow: /directory-name/

- Disallow: The second most common directive in a robot txt file is disallow rule. It specifies the folder or sometimes the entire directory which is to be excluded from crawling.

What Issues Can Robots.txt Cause?

A small mistake in a robot txt file can have some detrimental effects on your website. But it’s not the end of the world! There’s nothing a little bit of ‘attention to detail’ can’t fix. Here are some mistakes that should be avoided with robotstxt files

- Blocking the Entire Site: It sounds silly, but it does happen. Web developers block one section of a site while working on it but then forget to unblock it once finished. This affects the website’s rankings considerably. Therefore, the next time you block one section, ensure you unblock it after the site goes live.

- Omitting Previously Indexed Pages: A word of caution: don’t block pages which are already indexed. This is because the indexed page will get stuck in Google’s index. To remove them from the index, add a meta robots “noindex” tag to the sites themselves and allow Google to crawl and analyze that.

Conclusion

Understanding robots txt files is not an easy feat. Whatever we have covered in this blog is just the tip of the iceberg. To fully comprehend how important this seemingly small file is, we recommend getting in touch with us at AdLift. We have years of experience when it comes to digital marketing, and we never miss out on small details like the correct updating of robots.txt files.